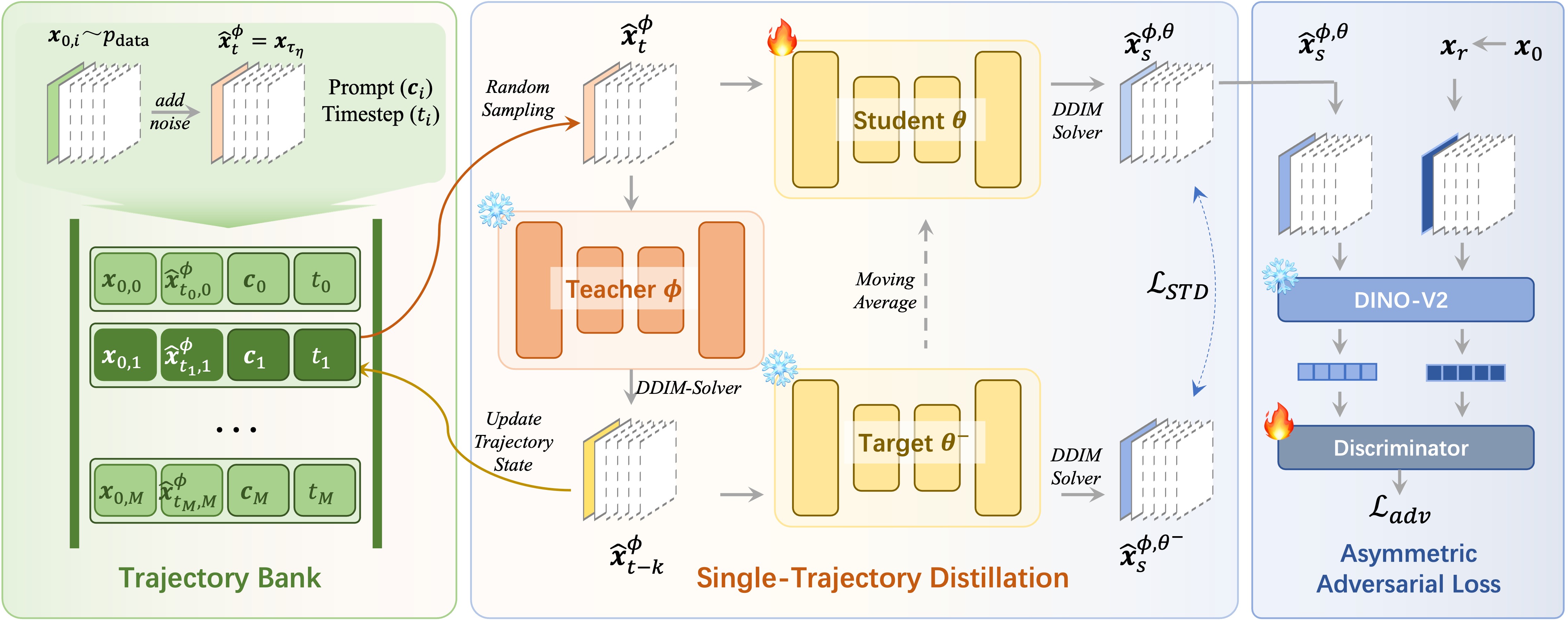

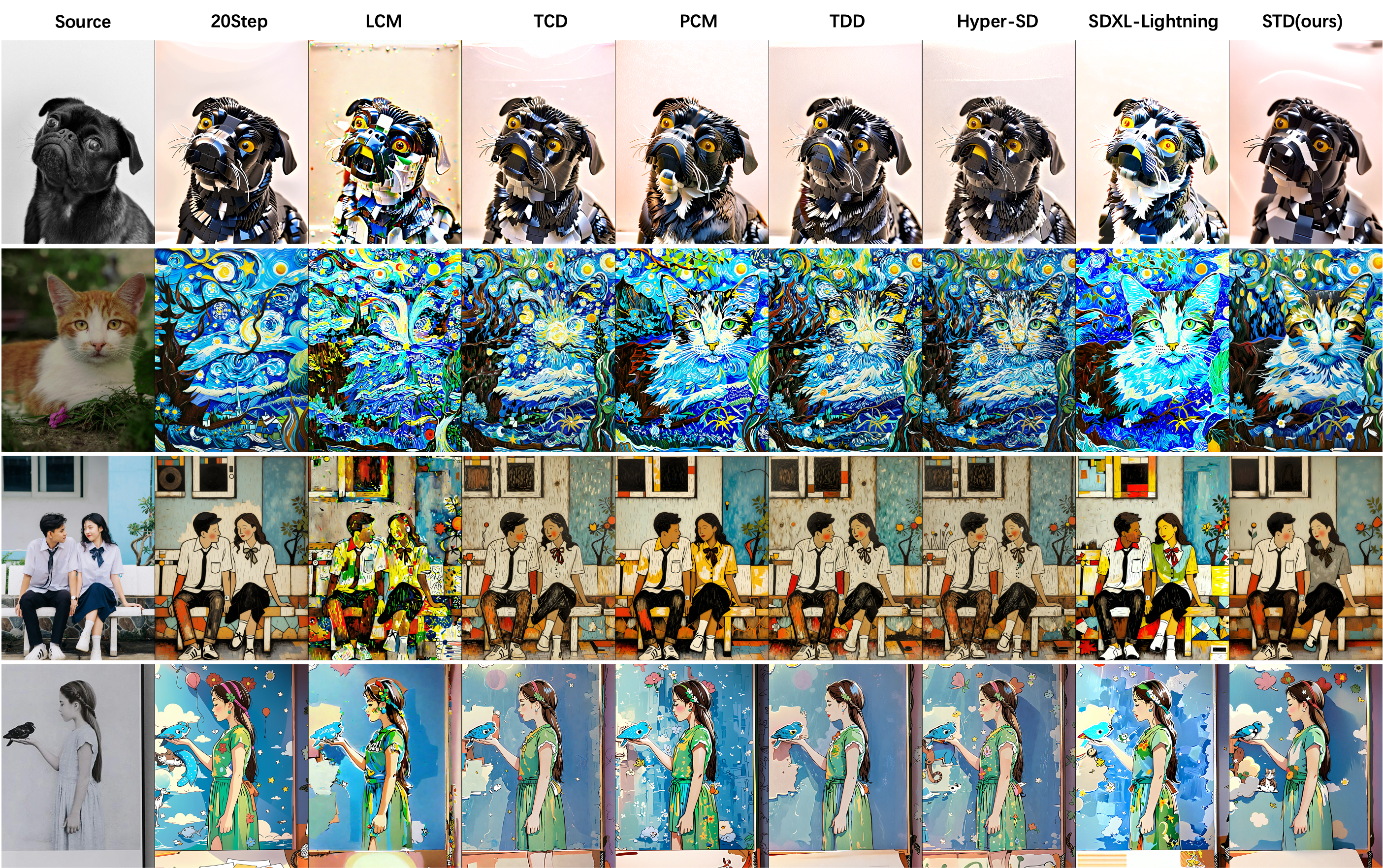

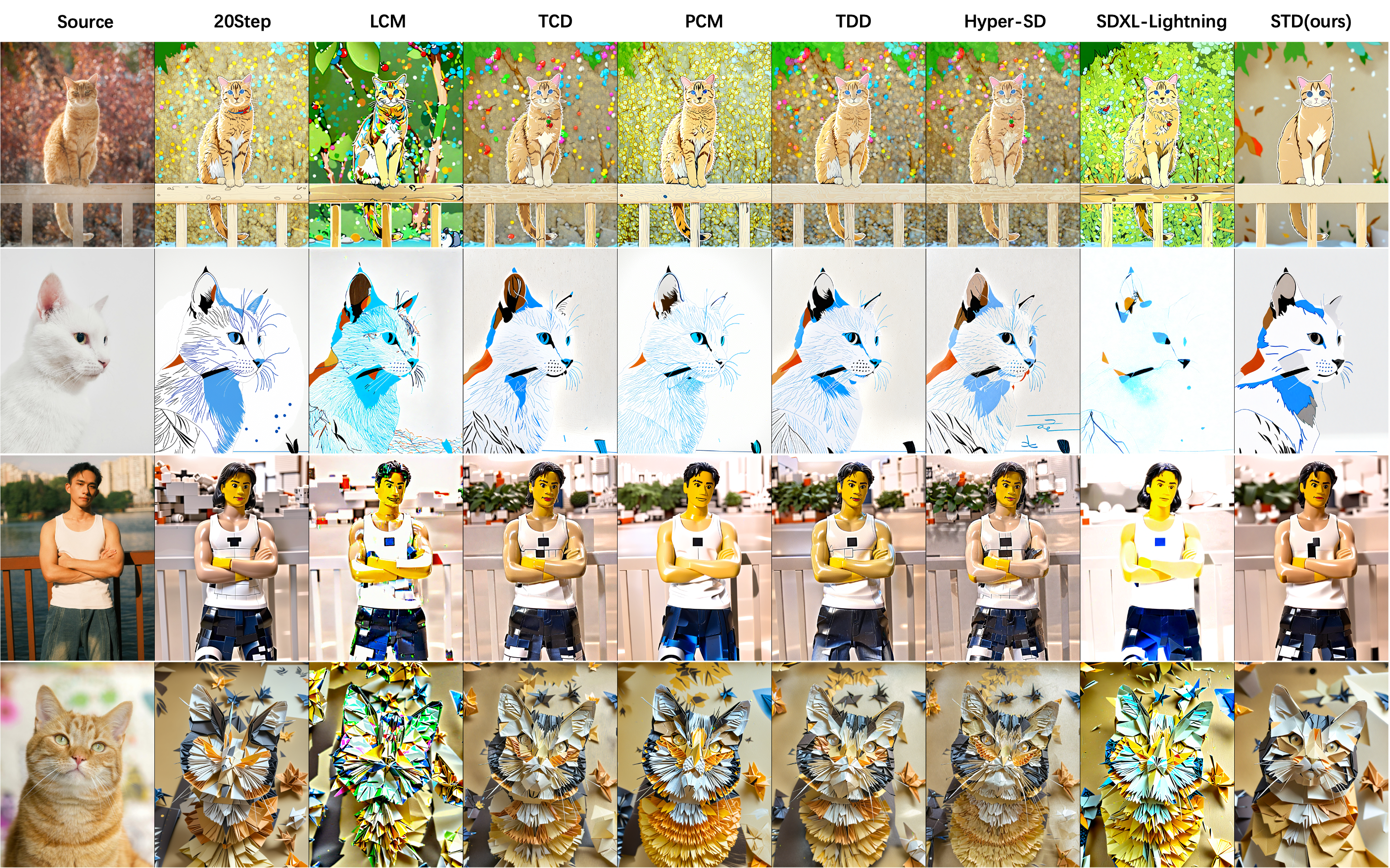

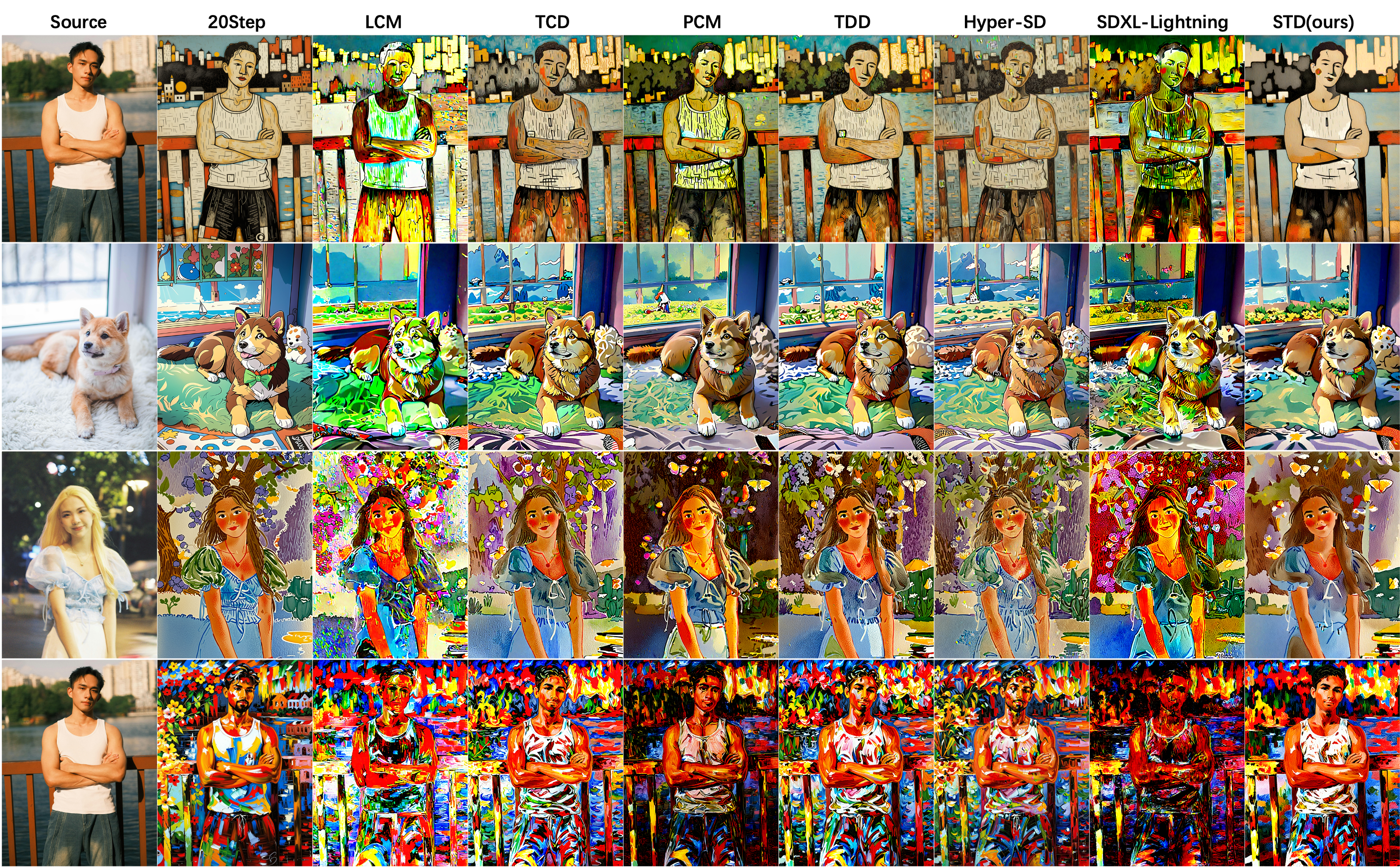

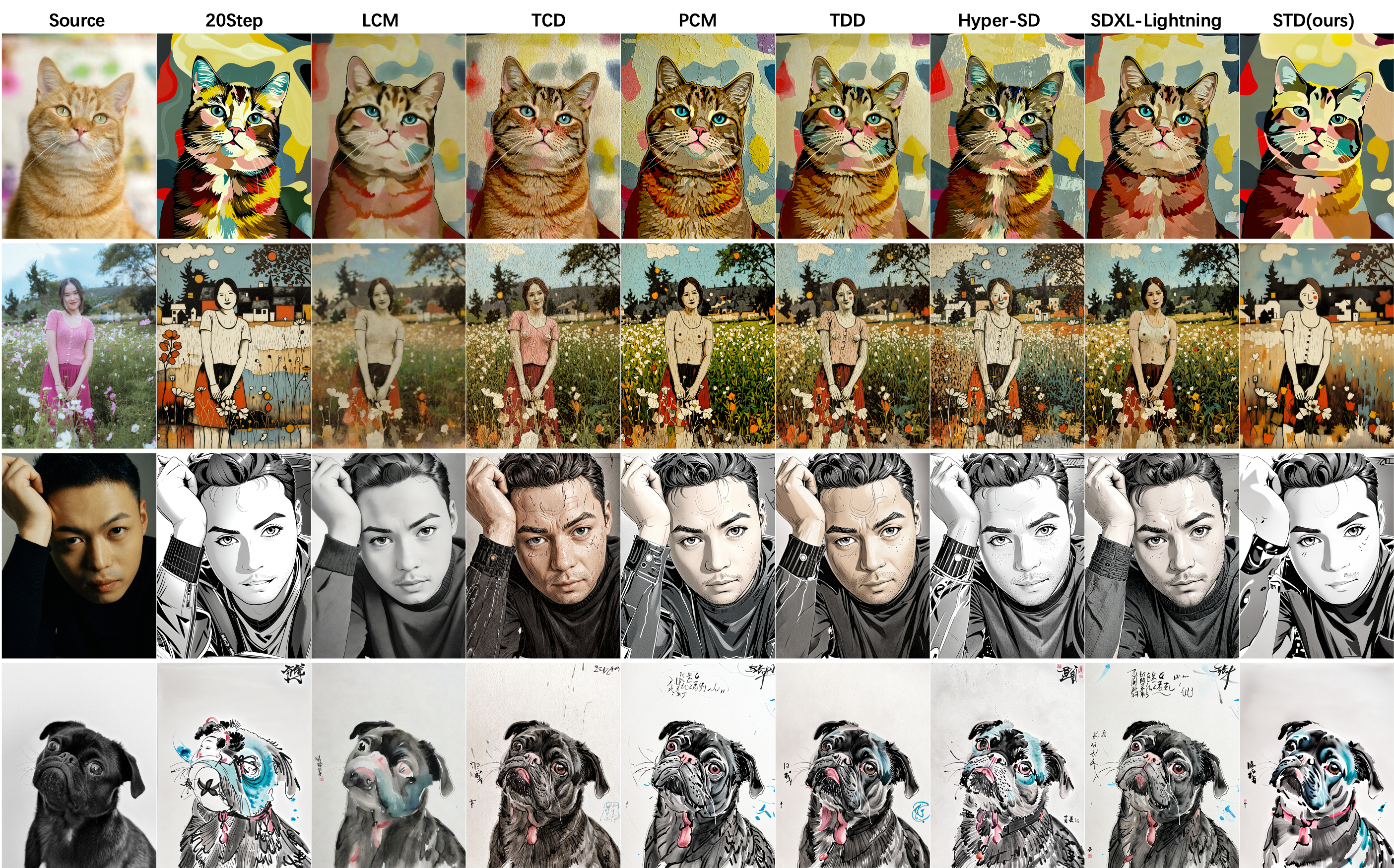

Trajectory distillation based on consistency models (CMs) provides an effective framework for accelerating diffusion models by reducing the number of inference steps. However, we find that existing CMs degrade style similarity and compromise aesthetic quality in stylization tasks, especially in image-to-image or video-to-video transformations that start denoising from partially noised inputs. The core limitation is that existing methods enforce alignment between the probability flow ODE (PF-ODE) trajectories of the student model and its imperfect teacher model only at the initial step. This partial alignment cannot guarantee consistency along the full trajectory, which compromises overall generation quality. To address this issue, we propose Single Trajectory Distillation (STD), a training framework that starts from partially noised states. To offset the extra time STD introduces, we design a trajectory bank that pre-stores intermediate states of the teacher model's PF-ODE trajectories, so that student training keeps the same efficiency as conventional consistency models. Furthermore, we incorporate an asymmetric adversarial loss to explicitly enhance the style consistency and perceptual quality of generated outputs. Extensive experiments on image and video stylization show that our method outperforms existing acceleration models in style similarity and aesthetic evaluations.
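The single-trajectory idea and the trajectory bank can be illustrated with a toy, one-dimensional sketch. Everything in it is an assumption for illustration, not the authors' implementation: the teacher is replaced by a hypothetical analytic drift `teacher_drift` that flows toward a hypothetical endpoint `TARGET`, noising follows an assumed variance-exploding form x_t = x_0 + t * eps, and `build_trajectory`, `TrajectoryBank`, and `consistency_loss` are hypothetical names. The sketch shows three steps: noising the input only up to t_start = strength * t_max (as in image-to-image stylization), running the teacher once along a single trajectory from that partial-noise state while caching every intermediate state, and drawing student training pairs from adjacent states of that same cached trajectory, so the training step needs no teacher call. The asymmetric adversarial loss is not modeled.

```python
import random
from typing import Callable, Dict, List, Tuple

State = Tuple[float, float]  # (t, x): a time step and the 1-D state at that step

TARGET = 1.0  # hypothetical "stylized" endpoint that the toy teacher flows toward


def teacher_drift(x: float, t: float) -> float:
    """Toy stand-in for a teacher's PF-ODE drift: dx/dt = (x - TARGET) / t.

    Integrating from larger t toward 0 moves x linearly toward TARGET.
    """
    return (x - TARGET) / t


def build_trajectory(x_input: float, noise: float, strength: float,
                     t_max: float = 1.0, t_min: float = 0.05,
                     num_steps: int = 8) -> List[State]:
    """Partially noise x_input, then follow ONE teacher trajectory down to t_min."""
    t_start = strength * t_max  # denoising starts here, not at t_max
    if t_start <= t_min:
        raise ValueError("strength too small: t_start must exceed t_min")
    x = x_input + t_start * noise  # assumed VE-style noising: x_t = x_0 + t * eps
    h = (t_start - t_min) / num_steps
    states: List[State] = [(t_start, x)]
    for i in range(num_steps):
        t = t_start - i * h
        x = x - h * teacher_drift(x, t)  # Euler step from t to t - h
        states.append((t - h, x))
    return states


class TrajectoryBank:
    """Caches teacher trajectories so training steps never re-run the teacher."""

    def __init__(self) -> None:
        self._store: Dict[int, List[State]] = {}

    def add(self, sample_id: int, states: List[State]) -> None:
        self._store[sample_id] = states

    def adjacent_pair(self, sample_id: int, rng: random.Random) -> Tuple[State, State]:
        """Return two neighboring states from the same cached trajectory."""
        states = self._store[sample_id]
        n = rng.randrange(len(states) - 1)
        return states[n], states[n + 1]


def consistency_loss(student: Callable[[float, float], float],
                     ema_student: Callable[[float, float], float],
                     pair: Tuple[State, State]) -> float:
    """Squared gap between student outputs at two points of one trajectory."""
    (t_n, x_n), (t_next, x_next) = pair
    # Both points come from the same teacher trajectory, so a consistent
    # student should map them to the same output.
    return (student(x_n, t_n) - ema_student(x_next, t_next)) ** 2


# Usage: fill the bank once, then sample training pairs from it.
bank = TrajectoryBank()
bank.add(0, build_trajectory(x_input=0.2, noise=0.5, strength=0.6))
pair = bank.adjacent_pair(0, random.Random(0))
```

For this toy drift, Euler steps are exact and (x - TARGET) / t stays constant along a trajectory, so TARGET + (x - TARGET) * t_min / t is a perfect consistency function and gives zero loss on every cached pair, whereas an identity student does not. In a real setting the states would be latent tensors, the student and EMA student would be networks, and the bank would be filled once before training.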

@article{xu2024single,
  title={Single Trajectory Distillation for Accelerating Image and Video Style Transfer},
  author={Xu, Sijie and Wang, Runqi and Zhu, Wei and Song, Dejia and Chen, Nemo and Tang, Xu and Hu, Yao},
  journal={arXiv preprint arXiv:2412.18945},
  year={2024}
}